Edge computing for real-time processing is increasingly essential as devices proliferate and sensors generate vast streams of data. Traditional centralized architectures struggle to keep pace with the demand for instant insights, especially as latency-sensitive workloads grow. By processing data at the edge, close to where it is generated, organizations can act within milliseconds and reduce their dependence on distant cloud data centers. This approach improves reliability, reduces bandwidth needs, and strengthens governance by keeping sensitive data under local control. To realize these benefits, leaders should align their strategy around three pillars: IoT edge computing, edge AI analytics, and a deliberate focus on latency reduction.

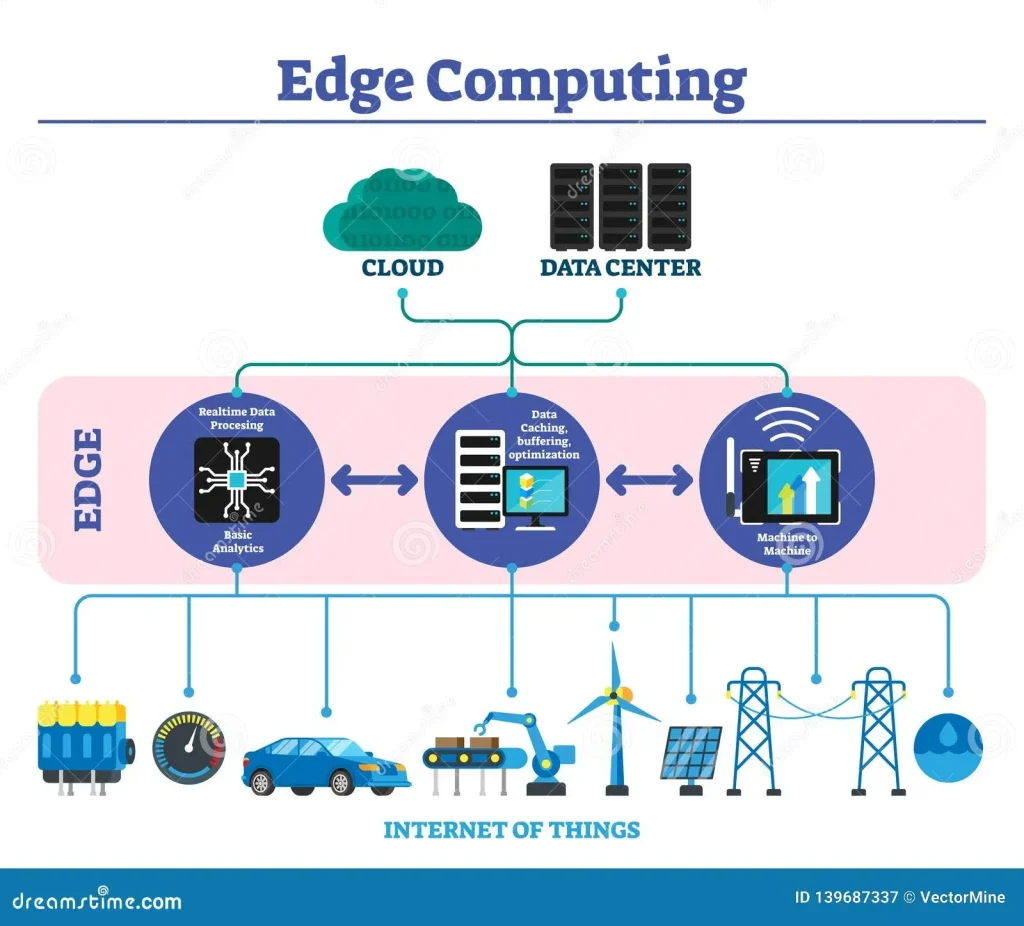

In practice, this means distributing computation across devices, gateways, and local data centers rather than sending everything to a central cloud. Near-edge processing and on-device analytics handle sensor streams at the point of origin, with lightweight AI models running on constrained hardware. This shift enables faster decisions, reduces network congestion, and improves privacy by limiting data exposure. Architectures often combine local micro data centers, fog-like intermediate nodes, and a cloud-edge continuum to balance speed with scalability. Implementing such an approach requires careful governance, robust security, and ongoing observability to keep results reliable as new devices come online.

Edge computing for real-time processing: foundations, benefits, and strategic value

Edge computing for real-time processing moves computation closer to where data is produced, improving speed, reliability, and governance in operational environments. By keeping data processing at or near the source, organizations can cut end-to-end latency substantially and lower the risk of data exposure, which also simplifies regulatory compliance. This shift turns what used to be a niche capability into a strategic differentiator across manufacturing, logistics, healthcare, energy, and smart city initiatives.

With edge computing for real-time processing, decisions can be made within milliseconds, enabling proactive maintenance, autonomous control, and faster responses in safety-critical situations. The approach also reduces bandwidth consumption and cloud dependency, making systems more resilient to connectivity outages. IoT edge computing ecosystems support real-time data processing at the edge and can enforce data governance policies where the data lives, while delivering faster insights on-site.

Real-time data processing at the edge: architectural patterns and data flow

Architectures for real-time data processing at the edge include tiered edge, fog computing, the cloud-edge continuum, and containerized microservices. Tiered edge configurations push initial processing to local devices and gateways and forward only essential summaries to central systems. This design minimizes traffic, speeds up responses, and simplifies data governance by keeping sensitive streams on-site when possible.
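To make the tiered-edge idea concrete, here is a minimal Python sketch of a gateway that groups raw readings into fixed-size windows and forwards only a compact summary per window. The `WindowSummary` fields, the window size, and the function names are illustrative assumptions, not part of any particular edge platform.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Iterable, Iterator, List


@dataclass
class WindowSummary:
    """Compact record sent upstream instead of the raw readings."""
    sensor_id: str
    count: int
    minimum: float
    maximum: float
    average: float


def summarize_window(sensor_id: str, readings: List[float]) -> WindowSummary:
    """Reduce one window of raw readings to a summary on the gateway."""
    if not readings:
        raise ValueError("window must contain at least one reading")
    return WindowSummary(
        sensor_id=sensor_id,
        count=len(readings),
        minimum=min(readings),
        maximum=max(readings),
        average=mean(readings),
    )


def tiered_forward(sensor_id: str, stream: Iterable[float], window_size: int) -> Iterator[WindowSummary]:
    """Yield one summary per full window; raw readings never leave the gateway.

    A trailing partial window is not emitted in this sketch.
    """
    window: List[float] = []
    for value in stream:
        window.append(value)
        if len(window) == window_size:
            yield summarize_window(sensor_id, window)
            window = []


if __name__ == "__main__":
    raw = [20.0 + (i % 7) * 0.1 for i in range(1000)]
    summaries = list(tiered_forward("press-04", raw, window_size=100))
    print(len(raw), "readings ->", len(summaries), "summaries")
```

With a window of 100 readings, 1,000 raw values become 10 summaries, which is the kind of traffic reduction tiered edge aims for. A production gateway would likely also flush partial windows on a timer.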

Designing the data flow requires careful latency budgeting: sampling time, network transit, processing, and actuation together must fit within millisecond-scale requirements. By processing streams locally and filtering at the source, organizations reduce latency while still relying on regional hubs for heavier analytics when needed.
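A latency budget is the sum of its stages checked against a target. The sketch below assumes the four stages named above; the millisecond figures are hypothetical placeholders chosen to show the arithmetic, not measurements from any real system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LatencyBudget:
    """Per-stage latency estimates in milliseconds."""
    sampling_ms: float
    transit_ms: float
    processing_ms: float
    actuation_ms: float

    def total_ms(self) -> float:
        return self.sampling_ms + self.transit_ms + self.processing_ms + self.actuation_ms

    def headroom_ms(self, target_ms: float) -> float:
        """Positive means slack remains; negative means the budget is exceeded."""
        return target_ms - self.total_ms()

    def meets(self, target_ms: float) -> bool:
        return self.total_ms() <= target_ms


# Hypothetical figures: same sensor and actuator, processing placed differently.
TARGET_MS = 10.0
edge = LatencyBudget(sampling_ms=1.0, transit_ms=0.5, processing_ms=2.0, actuation_ms=1.0)
cloud = LatencyBudget(sampling_ms=1.0, transit_ms=40.0, processing_ms=2.0, actuation_ms=1.0)

for name, budget in (("edge", edge), ("cloud", cloud)):
    print(
        f"{name}: total={budget.total_ms():.1f} ms, "
        f"headroom={budget.headroom_ms(TARGET_MS):.1f} ms, "
        f"meets={budget.meets(TARGET_MS)}"
    )
```

Only the transit term differs between the two cases here, which reflects the paragraph's point: moving processing on-site mainly shrinks the network portion of the budget.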

IoT edge computing: enabling connected devices with near-instant insights

IoT edge computing brings processing close to sensors, gateways, and micro data centers deployed in factories, warehouses, and remote sites. By embedding analytics at the edge, devices with limited compute can run real-time inference and filter data before it ever leaves the premises. This proximity reduces reliance on centralized clouds, enables offline operation, and supports privacy-preserving data handling.
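Filtering before data leaves the premises can be as simple as a deadband: a reading is forwarded only when it moves far enough from the last value that was sent. This is one common filtering technique chosen here for illustration; the threshold is an assumed, application-specific parameter.

```python
from typing import Iterable, Iterator, Optional


def deadband_filter(readings: Iterable[float], threshold: float) -> Iterator[float]:
    """Forward a reading only if it differs from the last forwarded value by more than threshold.

    The first reading is always forwarded so the receiver has a baseline.
    """
    last_sent: Optional[float] = None
    for value in readings:
        if last_sent is None or abs(value - last_sent) > threshold:
            last_sent = value
            yield value


if __name__ == "__main__":
    temperatures = [20.0, 20.1, 20.2, 21.0, 21.05, 19.5]
    print(list(deadband_filter(temperatures, threshold=0.5)))
```

For the input above with a threshold of 0.5, only `20.0`, `21.0`, and `19.5` are forwarded; the small fluctuations stay on the device.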

This model also supports data lifecycle governance: specifying what stays on the device, what gets summarized, and what is sent to the cloud for analytics. With IoT edge computing, manufacturers and operators can scale across large fleets while maintaining consistent security policies and local resilience.
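A data lifecycle policy like the one described above can be expressed as a small lookup table. The category names, dispositions, and retention periods below are hypothetical examples; real values would come from an organization's own governance and compliance requirements.

```python
from dataclasses import dataclass
from enum import Enum


class Disposition(Enum):
    KEEP_ON_DEVICE = "keep_on_device"  # never leaves the device
    SUMMARIZE = "summarize"            # only aggregates are forwarded
    SEND_TO_CLOUD = "send_to_cloud"    # forwarded for cloud-based analytics


@dataclass(frozen=True)
class Rule:
    disposition: Disposition
    local_retention_hours: int


# Hypothetical policy table: categories and retention periods are illustrative only.
POLICY = {
    "camera_frames": Rule(Disposition.KEEP_ON_DEVICE, 24),
    "vibration_raw": Rule(Disposition.SUMMARIZE, 72),
    "alarm_event": Rule(Disposition.SEND_TO_CLOUD, 24 * 30),
}

# Conservative default: unclassified data stays local.
DEFAULT_RULE = Rule(Disposition.KEEP_ON_DEVICE, 24)


def route(category: str) -> Rule:
    """Look up how a data category should be handled at the edge."""
    return POLICY.get(category, DEFAULT_RULE)


if __name__ == "__main__":
    for category in ("camera_frames", "vibration_raw", "alarm_event", "new_sensor"):
        rule = route(category)
        print(category, rule.disposition.value, rule.local_retention_hours)
```

Defaulting unknown categories to on-device handling keeps a newly added data source from reaching the cloud before anyone has classified it; the opposite default is simpler to operate but weaker on privacy.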

Edge AI analytics: on-device inference and autonomous decision-making

Edge AI analytics brings machine learning inference and decision-making directly onto devices, gateways, or micro data centers. TinyML techniques and hardware accelerators let useful models run within tight compute and memory limits, cutting latency and avoiding round-trips to the cloud. This on-device intelligence unlocks real-time anomaly detection, predictive maintenance, and autonomous control at the edge.
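As a stand-in for on-device inference, the sketch below flags anomalous sensor readings with a streaming z-score. It is deliberately a simple statistical detector, not a TinyML model; it illustrates the constant-memory, per-sample processing style that constrained devices need. The threshold and warm-up length are assumed defaults.

```python
import math


class StreamingAnomalyDetector:
    """Flags readings far from the running mean, using O(1) memory.

    Running mean and variance are maintained with Welford's online algorithm,
    so no history of readings needs to be stored on the device.
    """

    def __init__(self, z_threshold: float = 3.0, warmup: int = 10):
        self.z_threshold = z_threshold
        self.warmup = max(2, warmup)  # need at least 2 samples for a sample variance
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x: float) -> bool:
        """Score x against past readings, then add it to the statistics.

        Returns True if x is anomalous. Nothing is flagged during warm-up.
        """
        is_anomaly = False
        if self.count >= self.warmup:
            std = math.sqrt(self.m2 / (self.count - 1))
            if std > 0 and abs(x - self.mean) / std > self.z_threshold:
                is_anomaly = True
        # Welford update of count, mean, and m2.
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (x - self.mean)
        return is_anomaly


if __name__ == "__main__":
    detector = StreamingAnomalyDetector(z_threshold=3.0, warmup=5)
    for reading in [10.0, 10.2, 9.8, 10.1, 9.9, 10.0, 10.1, 50.0, 10.0]:
        if detector.update(reading):
            print("anomaly:", reading)
```

In this sketch flagged readings are still folded into the running statistics, so a long burst of outliers gradually shifts the baseline; excluding flagged values is a reasonable alternative.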

By keeping data local during inference, edge AI analytics reduces bandwidth costs and enhances privacy. When models are updated, organizations can A/B test new versions on a subset of edge devices to validate improvements without destabilizing the wider fleet or central systems.
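One way to run A/B tests across an edge fleet is to assign each device to a variant deterministically, so a device keeps the same model version across restarts without a central lookup. The sketch hashes an assumed experiment name together with a device ID; the function name and the "control"/"treatment" labels are illustrative.

```python
import hashlib


def assign_variant(device_id: str, experiment: str, treatment_share: float) -> str:
    """Deterministically place a device in 'control' or 'treatment'.

    The same (experiment, device_id) pair always maps to the same bucket,
    so no central assignment table is needed.
    """
    if not 0.0 <= treatment_share <= 1.0:
        raise ValueError("treatment_share must be between 0 and 1")
    digest = hashlib.sha256(f"{experiment}:{device_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big")  # roughly uniform over [0, 2**64)
    threshold = int(treatment_share * 2**64)    # integer compare avoids float edge cases
    return "treatment" if bucket < threshold else "control"


if __name__ == "__main__":
    for i in range(10):
        device = f"gateway-{i:03d}"
        print(device, assign_variant(device, "anomaly-model-v2", treatment_share=0.2))
```

Because assignment depends only on the experiment name and device ID, raising `treatment_share` from 0.1 to 0.2 keeps every device already in treatment there, which makes gradual rollouts straightforward.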

Latency reduction with edge computing: budgets, techniques, and performance metrics

Latency reduction with edge computing starts with a clear latency budget that accounts for sampling time, network transit, processing time, and actuation. By moving the bulk of computation to edge sites, end-to-end latency can drop from tens or hundreds of milliseconds to single-digit milliseconds in many scenarios. This improvement is foundational for real-time control, safety-critical alerts, and dynamic decision-making on the factory floor or in transport networks.

Techniques to optimize latency include compact data schemas and serialization formats, aggressive data filtering, local inference, and offline operation when connectivity is sporadic. Teams also prioritize critical streams, buffer data locally, and monitor in near real time to confirm that latency targets are met without compromising data integrity or governance.
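Prioritizing critical streams and buffering locally during outages can be combined in a bounded store-and-forward buffer. In this minimal sketch, lower numbers mean higher priority, and when the buffer is full the least critical message (the newest one among equal priorities) is dropped. The class name, priority scheme, and eviction rule are assumptions for illustration.

```python
import heapq
import itertools
from typing import Any, List, Tuple


class PriorityBuffer:
    """Bounded store-and-forward buffer; priority 0 is the most critical."""

    def __init__(self, capacity: int):
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self._heap: List[Tuple[int, int, Any]] = []
        self._seq = itertools.count()  # tie-breaker: FIFO order within a priority
        self.dropped = 0

    def push(self, priority: int, message: Any) -> None:
        entry = (priority, next(self._seq), message)
        if len(self._heap) < self.capacity:
            heapq.heappush(self._heap, entry)
            return
        # Buffer full: find the least critical entry (newest among equal priorities).
        worst = max(self._heap)
        if entry < worst:
            # The new message is more critical, so evict the worst one.
            self._heap.remove(worst)
            heapq.heapify(self._heap)
            heapq.heappush(self._heap, entry)
        # Either the evicted entry or the new message is lost.
        self.dropped += 1

    def drain(self, max_items: int) -> List[Any]:
        """Pop up to max_items messages, most critical first (e.g. when the uplink returns)."""
        out = []
        while self._heap and len(out) < max_items:
            out.append(heapq.heappop(self._heap)[2])
        return out

    def __len__(self) -> int:
        return len(self._heap)


if __name__ == "__main__":
    buf = PriorityBuffer(capacity=3)
    for i in range(3):
        buf.push(2, f"telemetry-{i}")
    buf.push(0, "alarm")
    print(buf.drain(10), "dropped:", buf.dropped)
```

The linear scan for the least critical entry is acceptable for small buffers; a larger deployment might keep one queue per priority level instead.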

Security, governance, and observability in edge-based real-time systems

A robust edge real-time strategy starts at the hardware level: hardware security modules protect keys, secure boot ensures that only trusted firmware runs, and trusted execution environments protect models and data while in use. Encryption in transit and at rest protects data as it moves between and is stored on devices and gateways. Zero-trust access controls, strong identity management, and continuous monitoring help detect anomalies and maintain trust in distributed edge architectures.
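Strong device identity often comes down to every message being verifiably tied to a key the device holds. The sketch below uses HMAC-SHA256 from Python's standard library to authenticate telemetry messages. It assumes a per-device symmetric key; in a real deployment that key would be provisioned into and used from an HSM or TEE rather than held in application memory. HMAC provides integrity and authenticity only, not the encryption discussed above.

```python
import hashlib
import hmac
import json
from typing import Any, Dict


def sign_message(device_key: bytes, payload: Dict[str, Any]) -> Dict[str, str]:
    """Serialize the payload canonically and attach an HMAC-SHA256 tag."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(device_key, body.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def verify_message(device_key: bytes, envelope: Dict[str, str]) -> Dict[str, Any]:
    """Return the payload if the tag is valid; raise ValueError otherwise."""
    expected = hmac.new(device_key, envelope["body"].encode("utf-8"), hashlib.sha256).hexdigest()
    # compare_digest runs in constant time, avoiding timing side channels.
    if not hmac.compare_digest(expected.encode("ascii"), envelope["tag"].encode("utf-8")):
        raise ValueError("message authentication failed")
    return json.loads(envelope["body"])


if __name__ == "__main__":
    key = bytes(32)  # placeholder only; a real key would come from secure provisioning
    envelope = sign_message(key, {"device_id": "gw-17", "temp_c": 21.5})
    print(verify_message(key, envelope))
```

This sketch does not stop replayed messages; including a timestamp or counter in the signed payload and rejecting stale values would address that.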

Operational excellence requires strong observability: centralized telemetry from devices and gateways, real-time dashboards, and incident response workflows. Governance policies should define what data stays at the edge, what is sent to the cloud, and how long it is retained, ensuring compliance across multiple jurisdictions. This foundation supports scalable edge deployments that remain secure, auditable, and manageable as workloads evolve.
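Latency targets are usually tracked as percentiles rather than averages, since a few slow responses can matter more than the typical case. The sketch computes nearest-rank p50 and p99 from a batch of telemetry samples and flags a breach of an assumed p99 target; the function names and report fields are hypothetical.

```python
import math
from typing import Dict, Sequence, Union


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile; pct must be in (0, 100]."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    # Multiplying before dividing avoids some floating-point rounding surprises.
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def latency_report(samples_ms: Sequence[float], target_p99_ms: float) -> Dict[str, Union[float, bool]]:
    """Summarize a telemetry batch and flag whether p99 exceeds the target."""
    p50 = percentile(samples_ms, 50)
    p99 = percentile(samples_ms, 99)
    return {"p50_ms": p50, "p99_ms": p99, "breach": p99 > target_p99_ms}


if __name__ == "__main__":
    samples = [float(ms) for ms in range(1, 101)]  # synthetic latencies, 1..100 ms
    print(latency_report(samples, target_p99_ms=95.0))
```

A dashboard or alerting rule would typically evaluate a report like this over a sliding time window per site or device class.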

Frequently Asked Questions

What is edge computing for real-time processing and why is latency reduction with edge computing important?

Edge computing for real-time processing is the practice of processing data close to where it is produced in order to deliver insights within milliseconds. By keeping compute near sensors, gateways, or local data centers, it minimizes network transit and latency, improves reliability, and strengthens governance because sensitive data can stay on premises. Reducing latency this way enables safer, faster decisions in manufacturing, transportation, healthcare, and other sectors.

How does real-time data processing at the edge work in practice?

Real-time data processing at the edge operates by analyzing streams on or near devices, gateways, or local data centers. It uses real-time streaming frameworks, lightweight containers, and AI models optimized for constrained environments, often with offline resilience. This approach returns actionable results quickly and reduces dependence on distant clouds.

Which architectural patterns power edge computing for real-time processing in IoT edge computing environments?

Architectural patterns for IoT edge computing in real-time processing include tiered edge, fog computing, cloud-edge continuum, and containerized microservices. Tiered edge processes data locally and forwards only summaries; fog enables regional near-real-time analytics; cloud-edge continuum blends edge and cloud workloads; containerized microservices simplify deployment at scale.

How does edge AI analytics enhance real-time decision-making at the edge?

Edge AI analytics brings machine learning inference to the edge, enabling real-time anomaly detection, predictive maintenance, and autonomous decisions. TinyML and edge accelerators allow models to run on devices with limited compute, reducing bandwidth use and latency, while preserving privacy by keeping data local.

What security, privacy, and governance considerations apply to edge computing for real-time processing?

Security, privacy, and governance are essential in edge real-time systems. Use hardware security modules, secure boot, and trusted execution environments; enforce end-to-end encryption and data-at-rest protections; adopt zero-trust access controls and continuous monitoring; define data governance policies about what stays at the edge and what moves to the cloud.

What practical steps and best practices should organizations follow when implementing edge computing for real-time processing?

Implementation best practices include: identify latency-sensitive workloads, select appropriate architectural patterns, and invest in portable, lightweight software stacks. Plan for model updates and A/B testing at the edge, automate deployment and monitoring, and enforce clear data lifecycle policies to balance edge processing with cloud analytics.

| Topic | Key Points |
|---|---|
| What is edge computing for real-time processing? | Processing data near its source to reduce latency and bandwidth; enables immediate action without sending every packet to the cloud; essential where delayed insights pose safety, efficiency, or revenue risks. |
| Why real-time processing matters | Real-time processing is a baseline for competitive advantage across industries (manufacturing: predictive maintenance; transport: dynamic routing; healthcare: timely alerts; energy: grid management; retail and smart cities: personalized, safer experiences). |
| Key technology components | Hardware: sensors, microcontrollers, edge servers, rugged data centers; Software: real-time streaming, lightweight containers, edge-optimized AI; Networking: 5G/private LTE; offline operation for resilience. |
| Data flow and latency budgets | Latency budget includes sampling, transit, processing, and actuation; local processing greatly reduces end-to-end latency; a few milliseconds can decide whether an anomaly is detected in time; design data lifecycles and efficient schemas. |
| Architectural patterns | Tiered edge; fog computing; cloud-edge continuum; containerized microservices. |
| Edge AI and analytics | Edge AI enables real-time anomaly detection, predictive maintenance, and autonomous decisions; TinyML enables on-device inference; reduces latency and cloud dependency while improving privacy. |
| Security, privacy, governance | Hardware security modules, secure boot, trusted execution environments; encryption at rest and in transit; zero-trust access, strong identity management, continuous monitoring; clear data governance policies for what stays at the edge vs. what goes to the cloud. |
| Operational excellence and observability | Centralized telemetry and monitoring; real-time dashboards, alerts, and incident response; metrics on latency, throughput, and errors; policy management and AI model lifecycle management. |
| Real-world use cases and impact | Manufacturing: detect wear, trigger preventive actions; Logistics: optimize routes and delivery windows; Healthcare: rapid critical alerts; Smart cities: traffic optimization and sensing with higher reliability. |
| Implementation considerations and best practices | Prioritize latency-sensitive workloads; choose a suitable architectural pattern; use lightweight, portable stacks; plan for updates and A/B testing; automate deployment, monitoring, and remediation; define data lifecycle policies. |
| Cost considerations and ROI | Potential savings from reduced bandwidth and cloud compute plus lower downtime; initial investment in edge hardware and software; ROI from faster decisions and better efficiency; adopt a phased approach with quick wins. |
| The future of edge real-time processing | 5G and beyond enable ultra-low latency; the cloud-edge continuum enables unified orchestration and governance; edge AI acceleration and open standards will broaden access and reduce costs. |

Summary

Edge computing for real-time processing is a strategic shift that brings data processing to the data source, enabling faster decisions, increased reliability, and stronger data governance. By adopting the right architectural patterns, integrating edge AI, and prioritizing latency reduction along with robust security, organizations can unlock substantial business value across manufacturing, logistics, healthcare, and smart cities.